Artificial intelligence (AI) has the potential to significantly disrupt industries and societies. As a result, the regulation of AI has become a pressing topic of discussion for governments, regulatory bodies, policymakers, and civil society organisations.

There is a need to understand and address the risks and ethical considerations associated with the use of AI. Governments are moving quickly to develop regulations and new laws to control the widespread adoption of AI and ensure it is safe and trustworthy for their citizens.

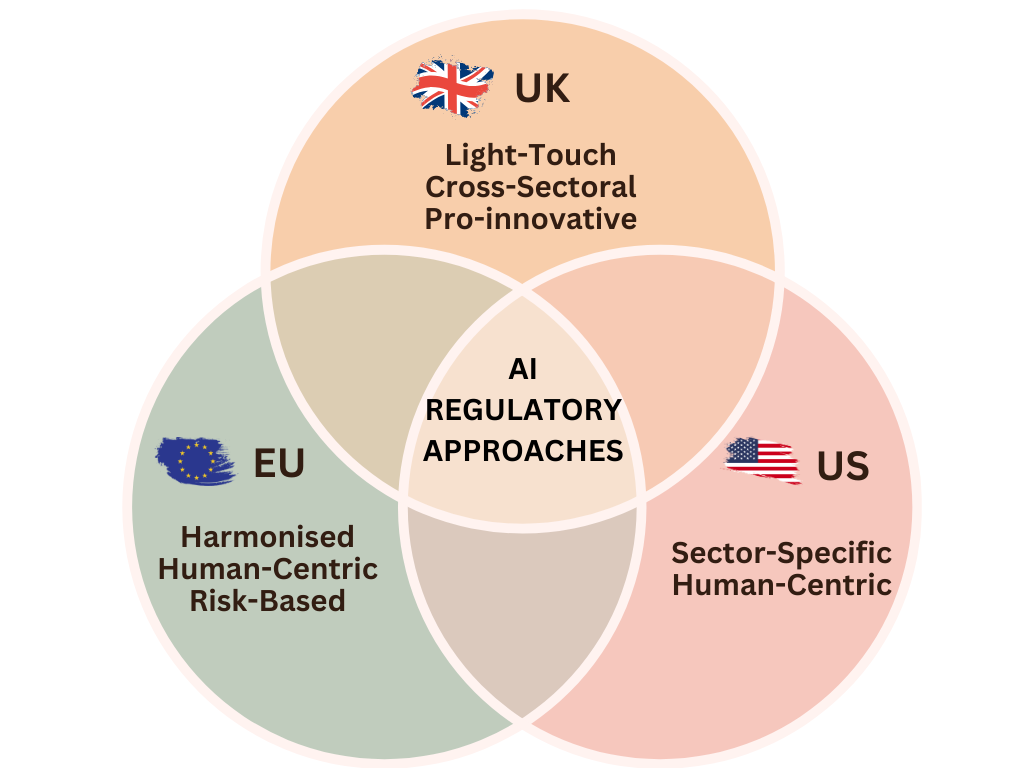

In this blog, we will outline how regulatory approaches to AI are taking shape in the United Kingdom (UK), the European Union (EU) and the United States (US), as well as through international efforts.

Regulating AI in the UK

The UK government has taken a light-touch, technology-neutral, and cross-sectoral approach to AI regulation. This approach has been expressed through white papers, policy papers and guidance, as well as work to develop sector-specific regulations. In particular, the AI white paper from the Department for Science, Innovation and Technology (DSIT), ‘A pro-innovation approach to AI regulation’, published in March 2023, established a set of principles for UK regulators to consider when overseeing the use of AI in the UK. The government’s strategy is to empower sector regulators to develop tailored approaches to controlling AI that promote flexibility and adaptability, whilst allowing AI governance to align with the patterns of use in different industries.

Responsibility for AI regulation affecting firms in payments and e-money will fall to:

The UK government has emphasised cooperation among existing regulators. The Digital Regulation Cooperation Forum (DRCF), formed in 2020, brings together four key regulators.

The forum aims to establish a cohesive regulatory framework for digital services, benefiting the public and businesses operating online.

Initiatives within the DRCF are themed around its objectives of bringing coherence, collaboration and capability to the UK’s digital landscape, and are set out in an annual work plan. The DRCF consulted in November on ideas for its 2024/25 work plan, which is expected shortly. The forum’s remit is the whole digital services landscape, but with AI expected to have a significant impact across many areas, it is likely to become an important focus in the coming year.

In November 2023, the AI Safety Summit was held in the UK as a major global event. The summit focused on the responsibility of developers for proactively ensuring the safety of AI-based systems.

A subsequent AI safety event will be hosted by South Korea in Q2 2024, and a full summit is set to be held in France later in the year. At the UK summit, the government also announced that it was putting an existing initiative to develop approaches to testing AI on a longer-term basis by launching the world’s first AI Safety Institute.

The EU approach

The EU has adopted a harmonised, human-centric, and risk-based approach to AI regulation. Reflecting its centralised approach, the EU first proposed the EU AI Act in 2021, representing the world’s first comprehensive regulatory framework for AI. The EU AI Act divides AI technologies into categories of risk. These range from ‘unacceptable’ uses which will be banned to high, medium and low-risk applications, with proportionate obligations placed on developers to identify and control risks in each category.

While the final text has not yet been issued, political agreement was reached by the European Parliament and Council in December 2023. Most recently, in March 2024, the European Parliament approved the Act, the world’s first major regulatory framework to govern AI applications.

The EU AI Act is expected to enter into force at the end of the legislature in May, after passing final checks and receiving endorsement from the Council. Implementation of the Act’s provisions will then be staggered from 2025 onward.

The extraterritorial reach of the EU AI Act makes it important for developers and businesses based in the UK and US to consider how the Act’s enforcement may affect their use of AI if they access the EU single market.

The European Commission’s Directorate-General for Communications Networks, Content and Technology (DG CONNECT) is responsible for digital and technology policy, including AI.

Within DG CONNECT, the newly established European AI Office is set to play a key role in implementing the AI Act by supporting the national competent authorities in each Member State.

The European AI Office will promote the development and adoption of trustworthy AI, and aim to mitigate the risks that poorly conceived or under-tested AI applications could pose to the rights of EU citizens. Ultimately, the Office will be the mechanism by which the Commission exercises the powers granted by the AI Act, including the ability to examine and, if necessary, sanction developers of general-purpose AI models.

The US approach

The US approach to regulating AI centres around a sector-specific and human-centric perspective. There is a focus on protecting citizens’ rights throughout the development and deployment of AI technologies. While there is no comprehensive federal AI law in the US at present, initiatives have emerged at both the federal and state levels.

The Federal Trade Commission (FTC) has been actively involved in addressing AI-related concerns, focusing on issues like bias, discrimination, and deceptive practices. Individual states, such as California, Washington, Illinois and New York, have implemented or proposed legislation addressing aspects of AI, including the use of facial recognition technology and algorithmic accountability.

There have also been ongoing discussions in the US about federal legislation to provide a unified approach to AI regulation. However, reaching a consensus on the specifics of AI regulation at the federal level remains a complex challenge. Different stakeholders have opposing perspectives on the level of regulation that is desirable and the balance to be struck between promoting innovation and mitigating risk.

International efforts

Beyond work at the level of nations or economic blocs, the UN General Assembly has recently adopted a resolution on “Seizing the opportunities of safe, secure and trustworthy artificial intelligence systems for sustainable development” (March 2024). The resolution is not binding, but was backed by more than 120 countries, including the UK and the US. Its intent is broadly framed as ensuring that AI development is sustainable and does not widen the digital divide between groups or impinge on established human rights.

Complementing international efforts at a non-governmental level, the International Organization for Standardization (ISO) has published standards on the management and control of risks in AI deployments (ISO/IEC 42001, ISO/IEC 23894:2023). These standards provide a set of established best practices that companies can follow to demonstrate that they are responsibly developing and deploying AI technology.

Summary

The UK, EU, and US are each rapidly developing their own approaches to AI regulation. As these efforts progress in parallel, differences in the regulatory frameworks are emerging. As AI technology continues to evolve and societal concerns about its impact change over time, these regulatory approaches will likely be further extended and refined. To capitalise on the benefits of AI, businesses must stay informed about the evolving regulatory requirements and standards. This will enable them to properly assess their compliance needs and manage the impact of changing AI regulations on their future plans.

Posted: 27 March 2023

Want to comment or have questions? You can contact the AI team at Flawless via:

Disclaimer: The information provided in this blog post is for general informational purposes only and does not constitute advice, legal or otherwise.